Paper Review - SIMPLER

- Evaluating Real-World Robot Manipulation Policies in Simulation

- 1. How should we evaluate policies trained on real robot data?

- 2. Potential SIMPLER way

- 3. How do real and SIMPLER performance correlate?

- 4. Problem definition

- 5. Metrics

- 6. Challenges to building a real-to-sim evaluation system

- 7. Simulation setup

- 8. Investigations for simulation evaluations

- 9. Experiment setup

- 10. Results

- 11. Ablations

- 12. Conclusion

- 13. BibTex

Evaluating Real-World Robot Manipulation Policies in Simulation

Xuanlin Li*1, Kyle Hsu*2, Jiayuan Gu*1, Karl Pertsch†2,3, Oier Mees†3, Homer Rich Walke3, Chuyuan Fu4, Ishikaa Lunawat2, Isabel Sieh2, Sean Kirmani4, Sergey Levine3, Jiajun Wu2, Chelsea Finn2, Hao Su‡1, Quan Vuong‡4, Ted Xiao‡4

*Equal contribution †Core contributors ‡Equal advising

1UC San Diego, 2Stanford University, 3UC Berkeley, 4Google DeepMind

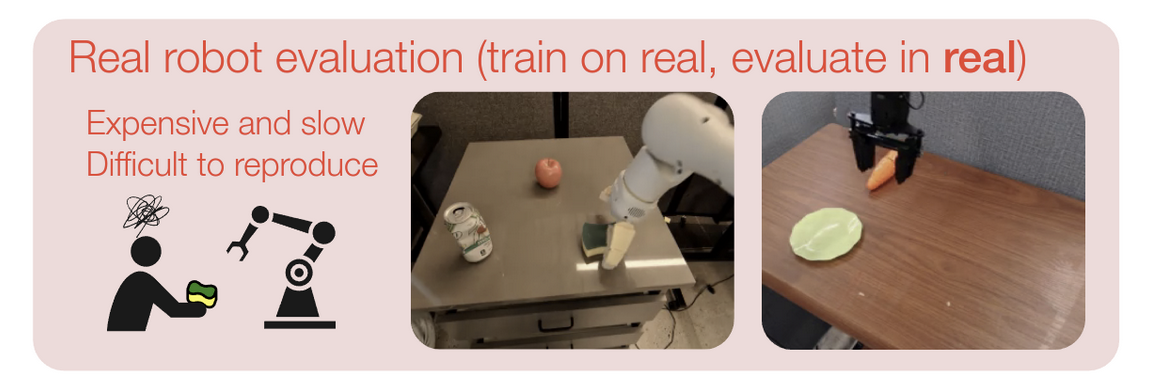

1. How should we evaluate policies trained on real robot data?

Roboticists generally evaluate policies trained on real-world data by running them in the real world. However, real-world evaluation has several problems:

- People bump into cameras

- Gripper gets stuck

- Real-world evaluation is slow and tedious

- Difficult to reproduce experiments

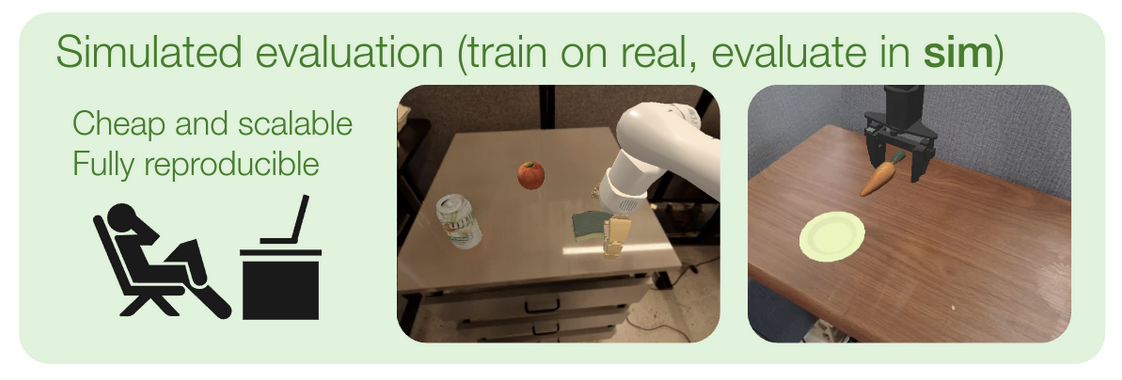

2. Potential SIMPLER way

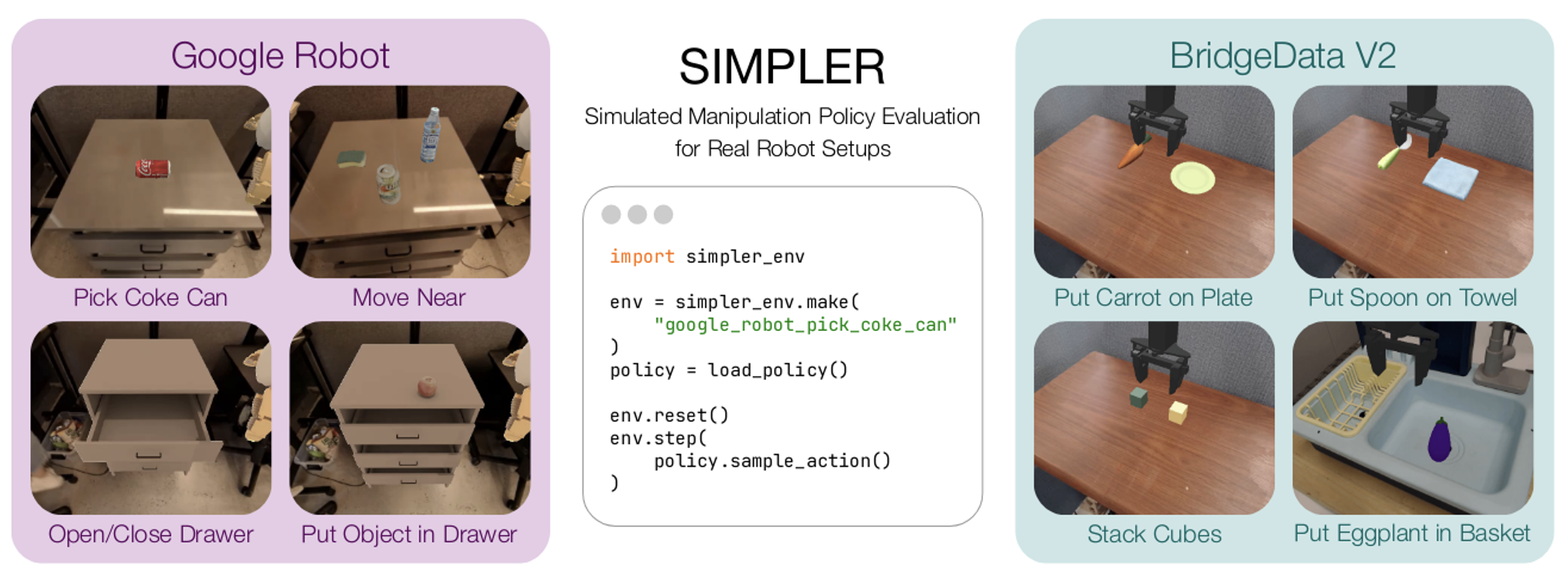

SIMPLER stands for Simulated Manipulation Policy Evaluation for Real Robot Setups.

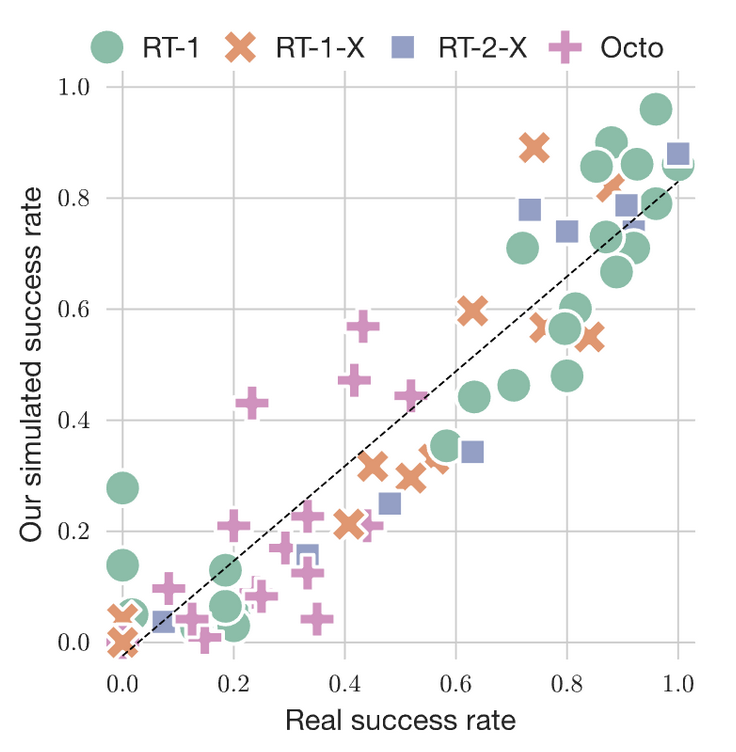

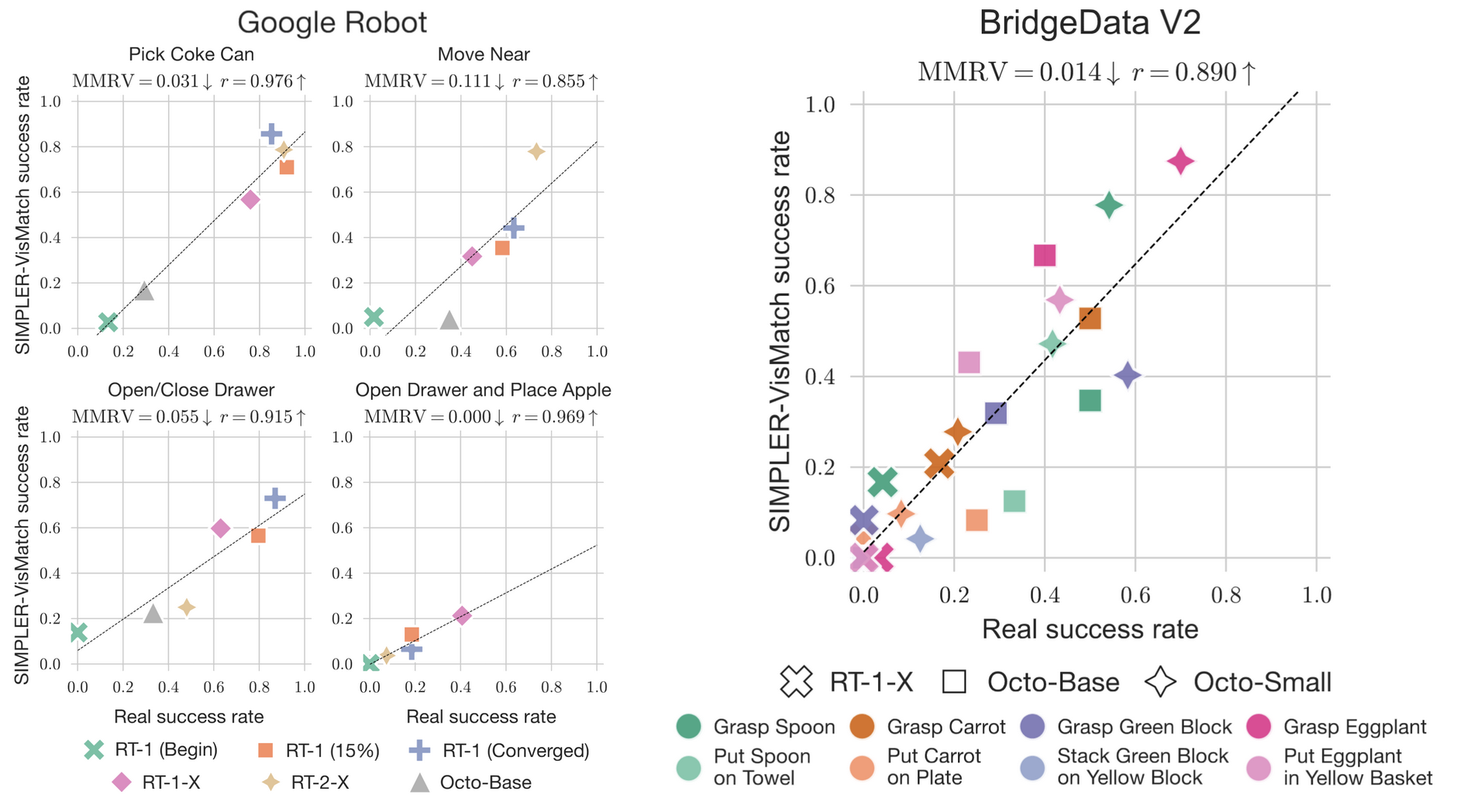

3. How do real and SIMPLER performance correlate?

4. Problem definition

- NOT to obtain 1:1 reproduction of policies’ real-world behavior

- To guide policy improvement decisions

- Construct a simulator S with a strong correlation between relative performances in real and sim

5. Metrics

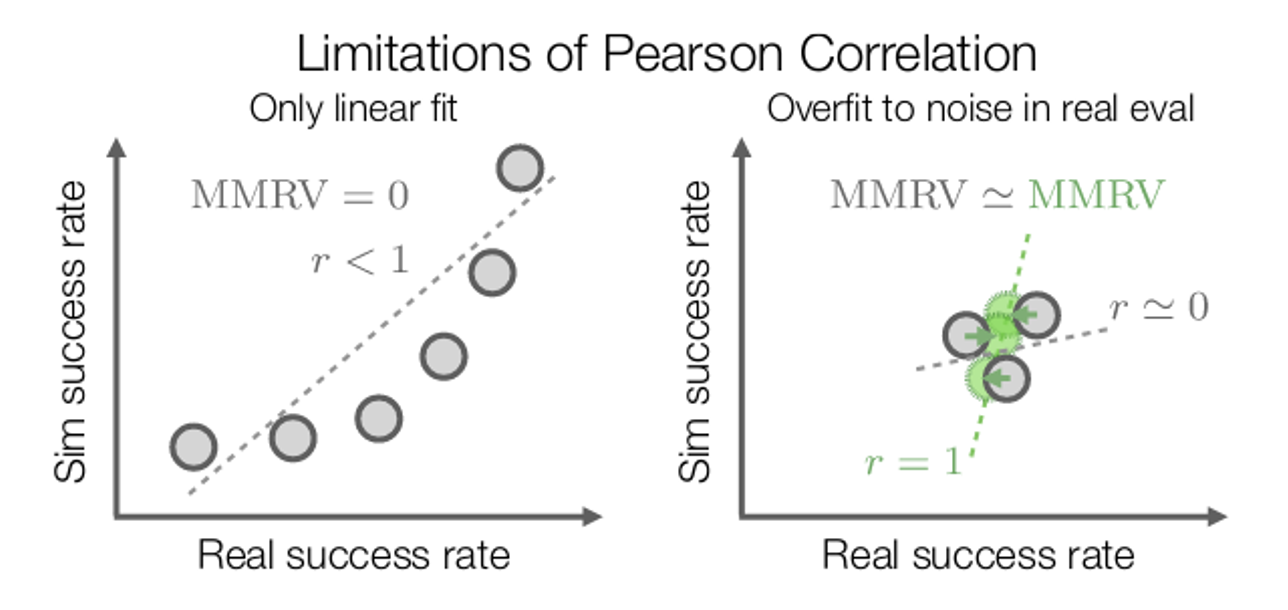

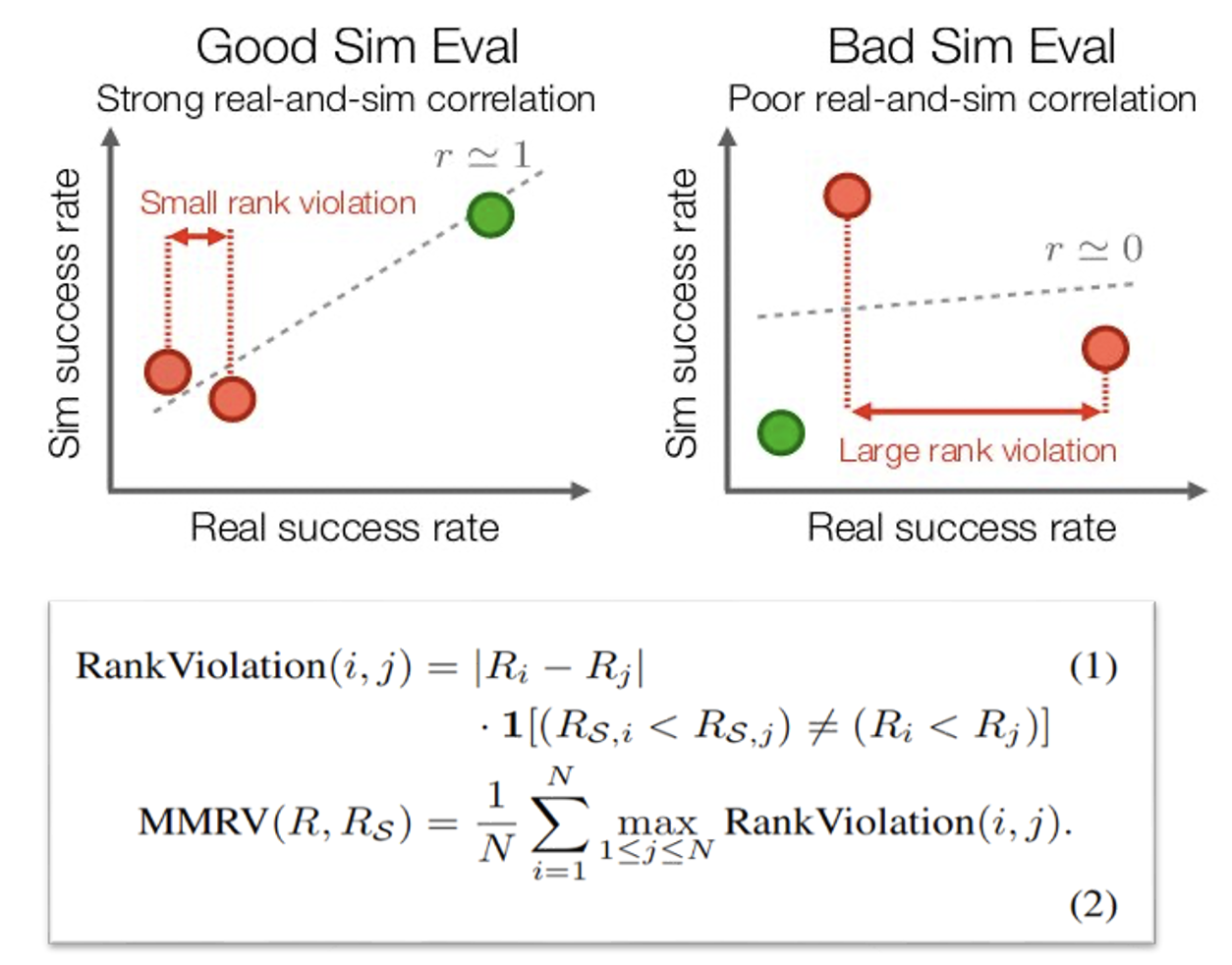

The paper proposes two metrics to measure how well performance in sim tracks performance in real:

- Pearson correlation coefficient (Pearson r)

- Mean Maximum Rank Violation (MMRV)
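
A minimal sketch of how the two metrics could be computed with NumPy, assuming each policy has one real success rate R_i and one simulated success rate S_i. The MMRV form used here follows the paper's definition as I understand it: the rank violation between policies i and j is |R_i - R_j| when sim and real disagree on their ordering (0 otherwise), and MMRV is the average over policies of each policy's worst violation. The function names and the sample numbers are made up for illustration; they are not results from the paper.

```python
import numpy as np

def pearson_r(real, sim):
    """Pearson correlation between real and simulated success rates."""
    real = np.asarray(real, dtype=float)
    sim = np.asarray(sim, dtype=float)
    return float(np.corrcoef(real, sim)[0, 1])

def mmrv(real, sim):
    """Mean Maximum Rank Violation (lower is better, 0 means rankings agree)."""
    real = np.asarray(real, dtype=float)
    sim = np.asarray(sim, dtype=float)
    # gap[i, j] = |R_i - R_j|: how much the two policies differ in the real world
    gap = np.abs(real[:, None] - real[None, :])
    # violated[i, j] is True when sim and real disagree on the ordering of i and j
    violated = (sim[:, None] < sim[None, :]) != (real[:, None] < real[None, :])
    # worst violation per policy, averaged over policies
    return float(np.mean(np.max(gap * violated, axis=1)))

# Made-up success rates for three hypothetical policies (illustration only).
real = [0.2, 0.5, 0.9]
sim_consistent = [0.1, 0.4, 0.8]  # same ranking as real
sim_swapped = [0.4, 0.1, 0.8]     # first two policies are ranked the wrong way round

print(pearson_r(real, sim_consistent), mmrv(real, sim_consistent))
print(pearson_r(real, sim_swapped), mmrv(real, sim_swapped))
```

Pearson r rewards a linear relationship between the two sets of numbers, while MMRV only penalizes ranking mistakes and weights each mistake by how far apart the two policies are in the real world. A swap between two policies that perform almost identically in real therefore costs very little.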

6. Challenges to building a real-to-sim evaluation system

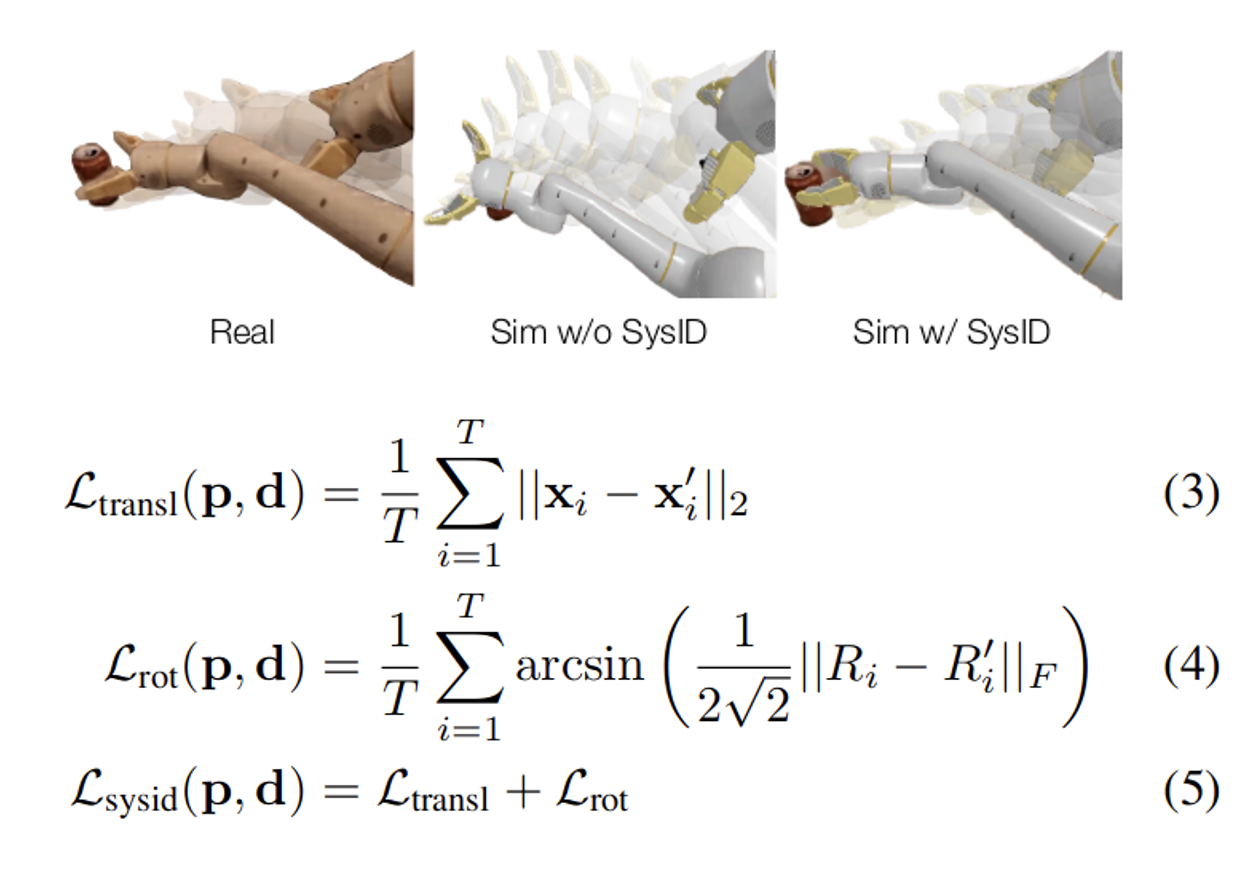

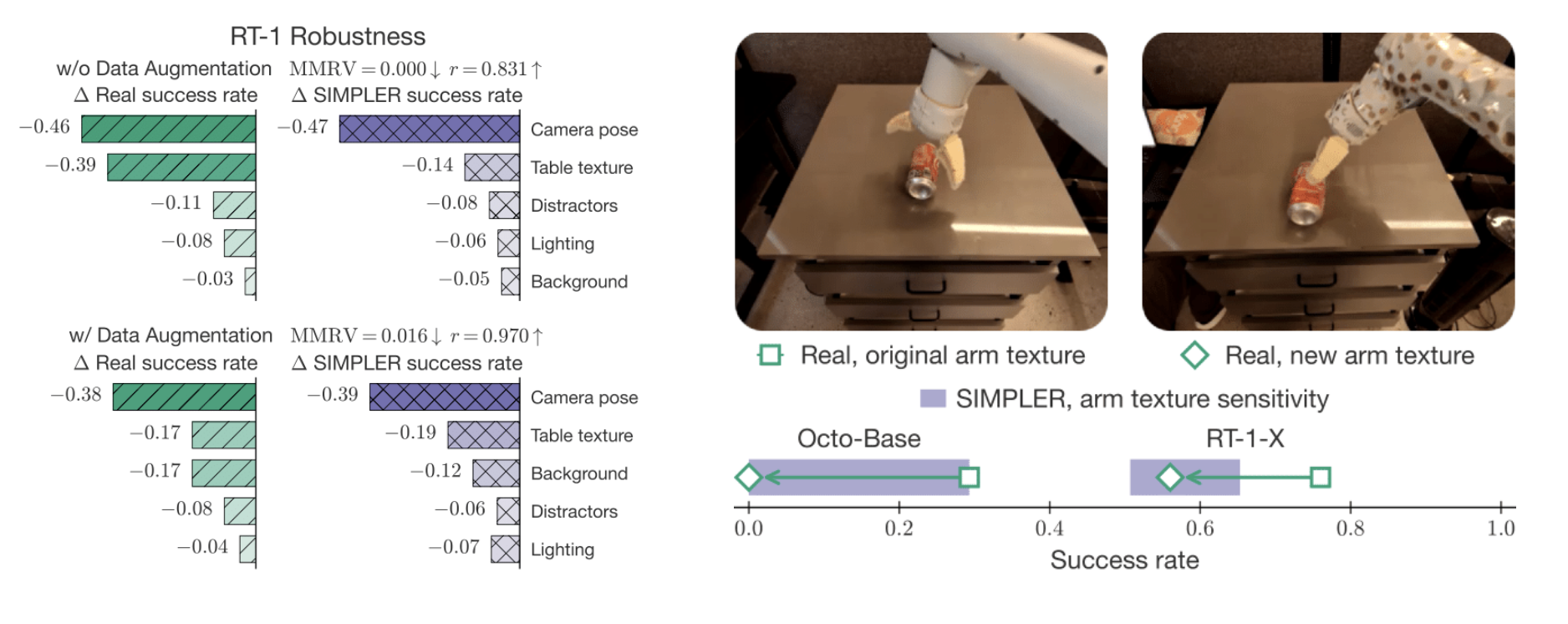

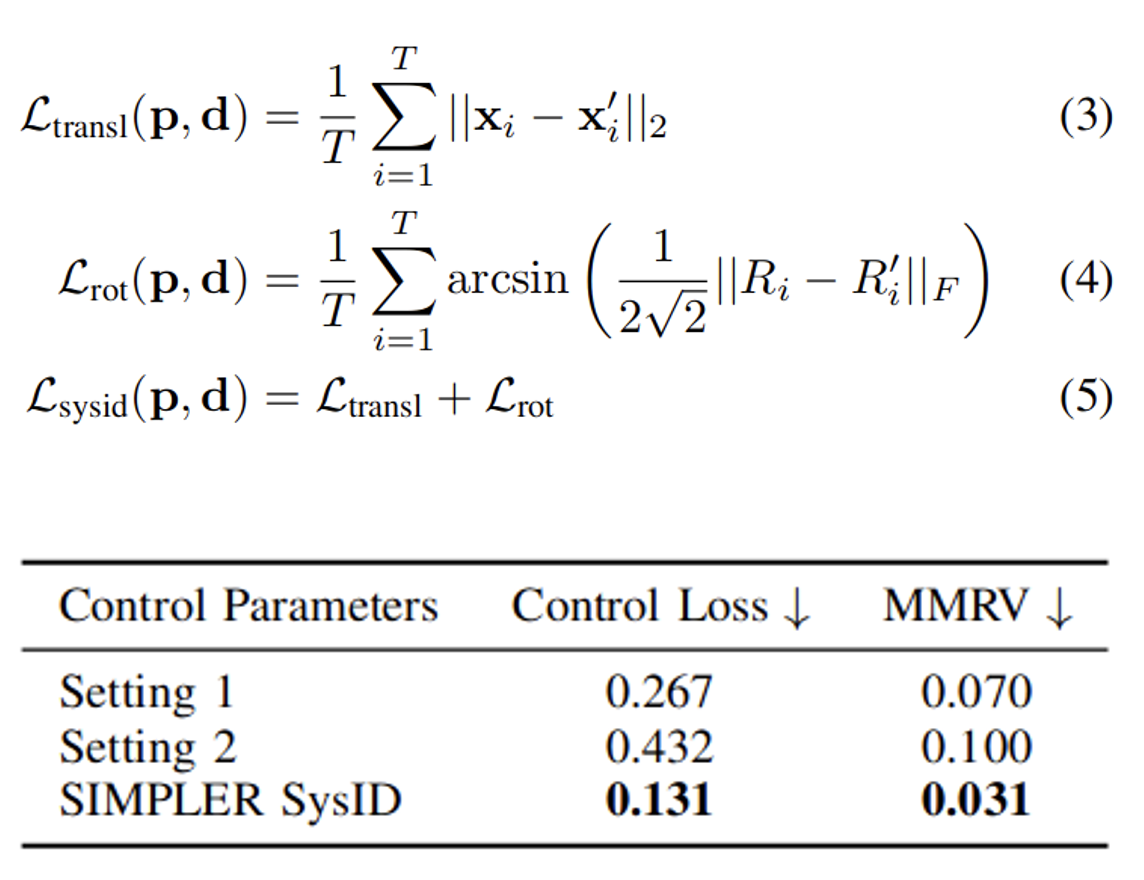

6.1 Mitigating the Real-to-Sim Control Gap - SysID (System Identification)

- Optimize the P and D gains (stiffness and damping factors) of the simulated controller

- Replay the actions of a demonstration trajectory in both sim and real, and compare the resulting motions
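
The idea behind these two steps can be sketched on a toy problem. The example below is not the paper's pipeline: it assumes a hypothetical 1-DoF unit-mass joint under PD control, uses a rollout with hidden gains as a stand-in for a logged real-robot trajectory, and searches a small grid of candidate gains for the pair whose simulated trajectory is closest to the "real" one. All names, gains, and grids are illustrative assumptions.

```python
import itertools
import numpy as np

def rollout(kp, kd, targets, dt=0.01, mass=1.0):
    """Simulate a 1-DoF joint tracking `targets` under a PD controller."""
    pos, vel = 0.0, 0.0
    traj = []
    for target in targets:
        # PD control law: stiffness acts on position error, damping on velocity
        force = kp * (target - pos) - kd * vel
        # semi-implicit Euler integration step
        vel += dt * force / mass
        pos += dt * vel
        traj.append(pos)
    return np.array(traj)

def sysid_grid_search(real_traj, targets, kp_grid, kd_grid):
    """Return (loss, kp, kd) for the gains whose rollout best matches real_traj."""
    best = None
    for kp, kd in itertools.product(kp_grid, kd_grid):
        loss = float(np.mean((rollout(kp, kd, targets) - real_traj) ** 2))
        if best is None or loss < best[0]:
            best = (loss, float(kp), float(kd))
    return best

# The same commanded targets are "replayed" in both the real and simulated system.
targets = np.concatenate([np.full(100, 0.5), np.full(100, -0.2)])
# Stand-in for a logged real trajectory: generated with gains the search does not know.
real_traj = rollout(kp=40.0, kd=8.0, targets=targets)

kp_grid = np.arange(10.0, 100.0, 10.0)
kd_grid = np.arange(2.0, 20.0, 2.0)
loss, kp, kd = sysid_grid_search(real_traj, targets, kp_grid, kd_grid)
print(f"best kp={kp}, kd={kd}, tracking loss={loss:.3e}")
```

Grid search is used here only because it is easy to read. With a real robot the loss would be computed against recorded trajectories, and any black-box optimizer could replace the grid.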

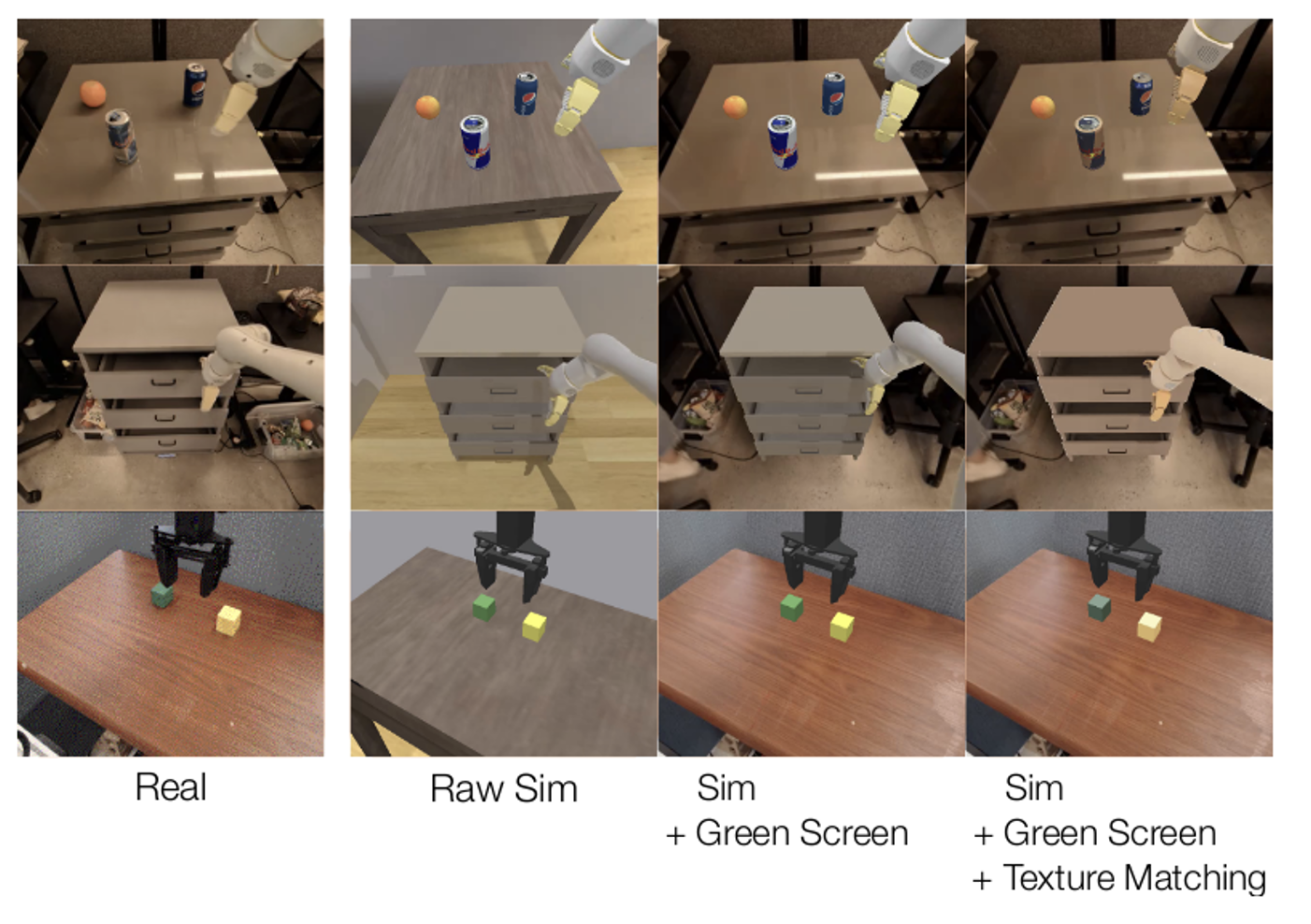

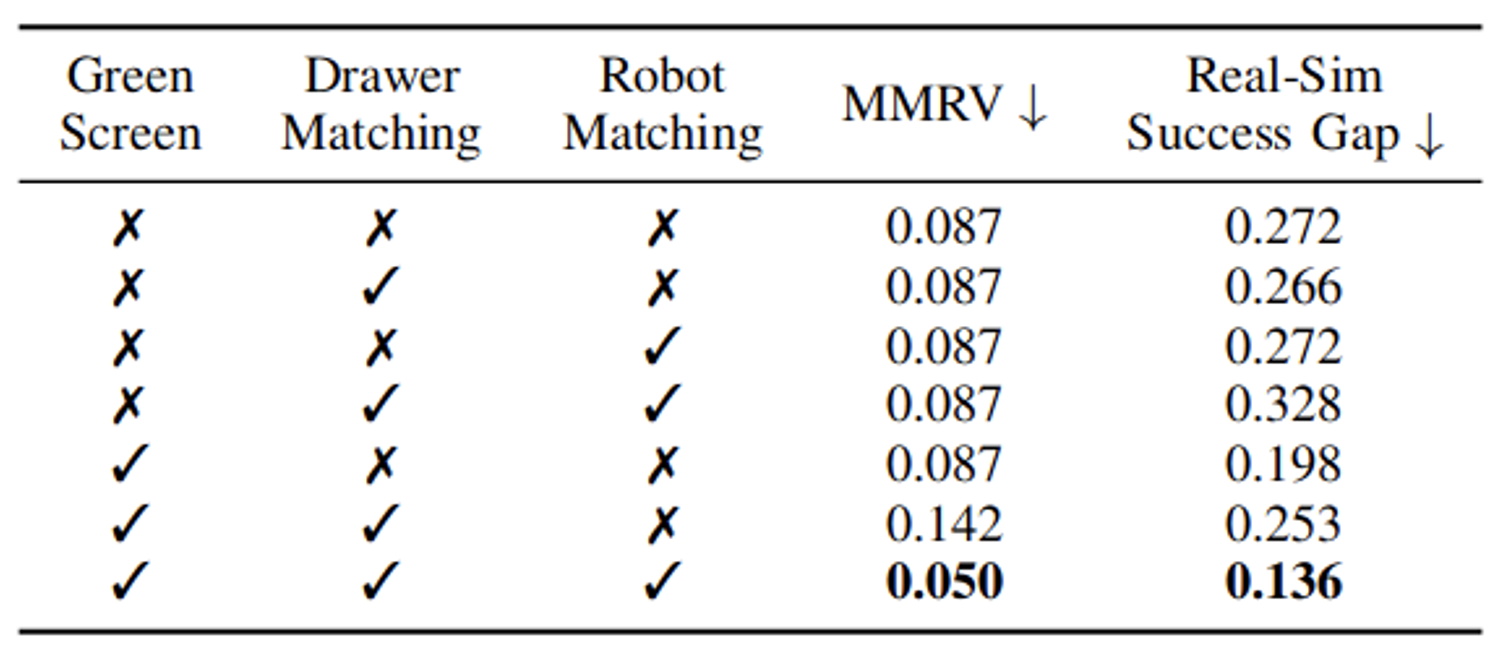

6.2 Mitigating the Real-to-Sim Visual Gap

The goal is to match the simulator visuals to those of the real-world environment with only a modest amount of manual effort, using green screening and texture matching.
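
Green screening can be illustrated as a simple image composite: keep the simulated pixels for the robot and the objects it interacts with, and take every other pixel from a photo of the real scene. The sketch below assumes the simulator can provide a per-pixel foreground mask alongside its RGB render; the function name and the tiny arrays are hypothetical and only show the compositing step.

```python
import numpy as np

def green_screen_composite(sim_rgb, foreground_mask, real_background):
    """Overlay simulated foreground pixels onto a real background image.

    sim_rgb:          (H, W, 3) simulated render
    foreground_mask:  (H, W) nonzero where the pixel belongs to the robot or an object
    real_background:  (H, W, 3) photo of the real scene from the same camera pose
    """
    if sim_rgb.shape != real_background.shape:
        raise ValueError("sim render and real background must have the same shape")
    mask = foreground_mask.astype(bool)[..., None]  # (H, W, 1), broadcasts over RGB
    return np.where(mask, sim_rgb, real_background)

# Tiny 2x2 example: white "simulated" pixels over a black "real" background.
sim_rgb = np.full((2, 2, 3), 255, dtype=np.uint8)
real_background = np.zeros((2, 2, 3), dtype=np.uint8)
foreground_mask = np.array([[1, 0], [0, 1]])

composite = green_screen_composite(sim_rgb, foreground_mask, real_background)
print(composite[..., 0])
```

Because the background is a fixed photo, this kind of composite only stays consistent when the simulated camera matches the pose of the camera that took the photo.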

7. Simulation setup

Two manipulation setups are used, one per robot embodiment (Google Robot and WidowX), each with its own set of tasks.

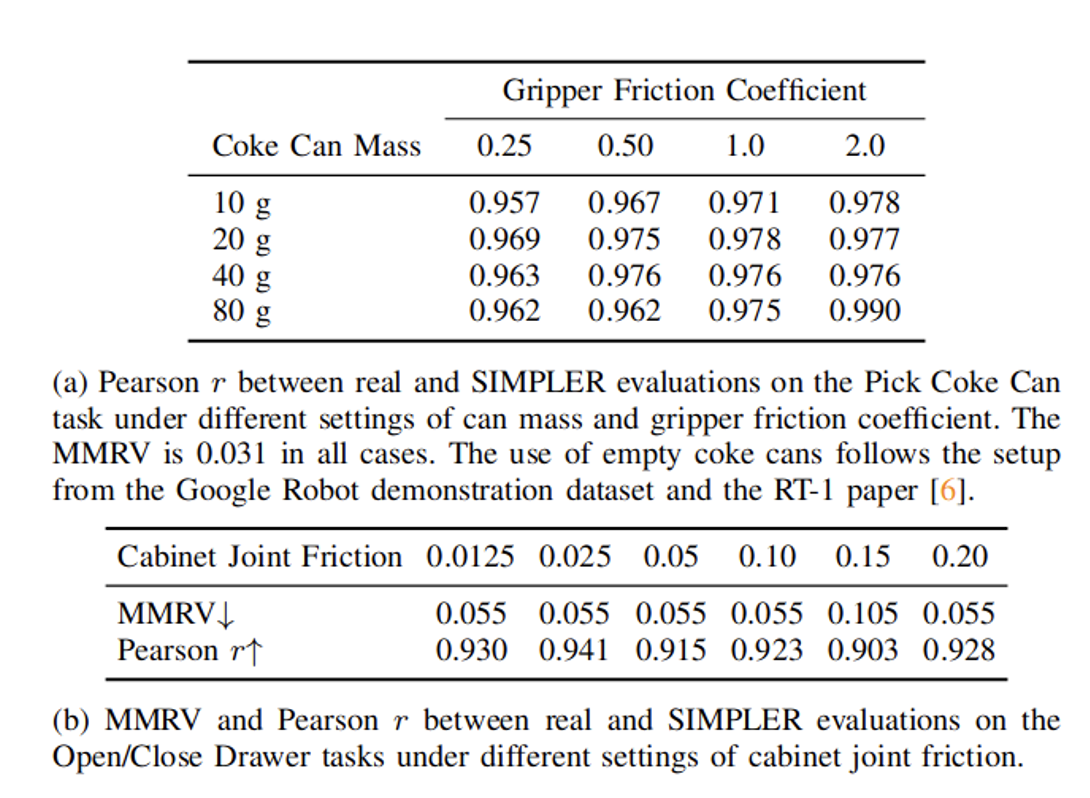

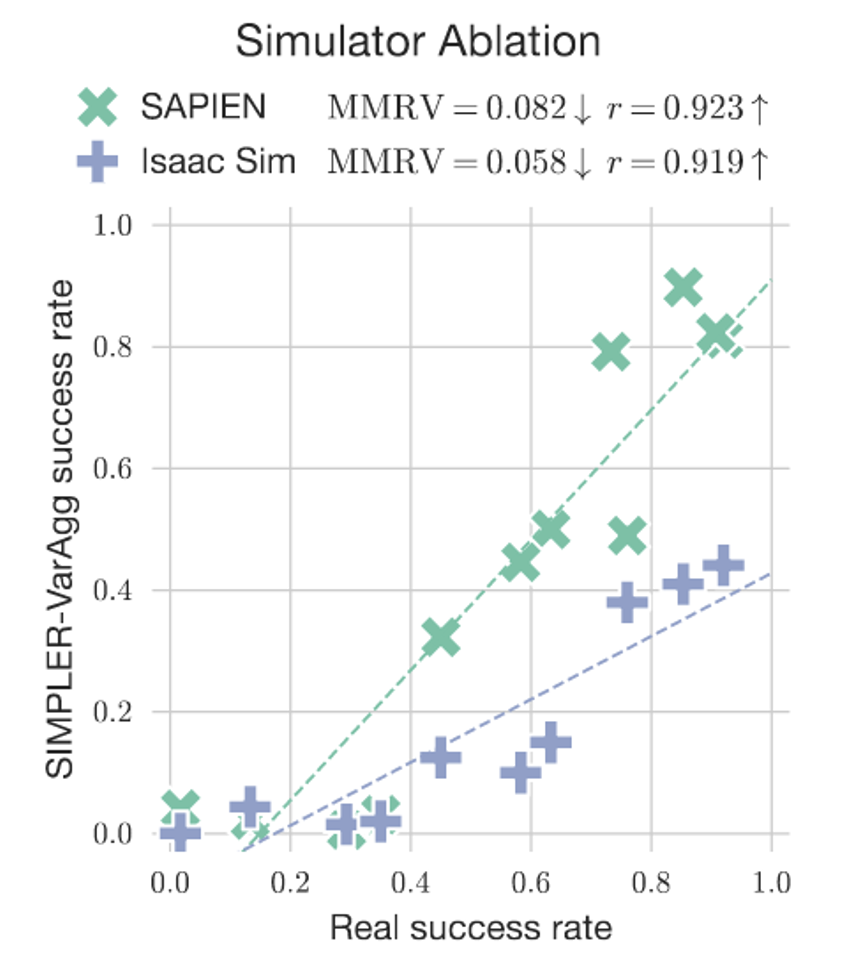

8. Investigations for simulation evaluations

The paper investigates the following key questions:

- Relative performances in sim and real

- Sensitivity to various visual distribution shifts

- Sensitivity to control and visual gaps

- Sensitivity to physical property gaps

- Do the results extend to a different physics simulator?

9. Experiment setup

The authors evaluate several open-source robot policies on the simulation setups described above. For the Google Robot, four versions of the robot arm and gripper colors are used.

- RT-1 (Begin)

- RT-1 (15%)

- RT-1 (Converged)

- RT-1-X

- RT-2-X

- Octo-Base

- Octo-Small

10. Results

SIMPLER can be used to evaluate diverse sets of rigid-body tasks (non-articulated / articulated objects, tabletop / non-tabletop tasks, shorter / longer horizon tasks), with many intra-task variations (e.g., different object combinations; different object / robot positions and orientations), for each of two robot embodiments (Google Robot and WidowX).

10.1 Evaluating and comparing policies

10.2 Analyzing and predicting policy behaviors under distribution shifts

11. Ablations

12. Conclusion

- SIMPLER appears to be a good proxy for real-world policy evaluation

- Limitations

  - No manipulation tasks with soft objects

  - No tasks with high motion dynamics

  - Green screening

  - Fixed cameras

  - No shadows and visual details

  - Manual effort in creating simulation evaluation environments is still high

13. BibTex

To cite this paper:

@article{li24simpler,

title={Evaluating Real-World Robot Manipulation Policies in Simulation},

author={Xuanlin Li and Kyle Hsu and Jiayuan Gu and Karl Pertsch and Oier Mees and Homer Rich Walke and Chuyuan Fu and Ishikaa Lunawat and Isabel Sieh and Sean Kirmani and Sergey Levine and Jiajun Wu and Chelsea Finn and Hao Su and Quan Vuong and Ted Xiao},

journal = {arXiv preprint arXiv:2405.05941},

year={2024},

}